How to Run Small Experiments to See If AI Overviews Cite Your Content

Will AI Cite You? How to Run Small, Simple Experiments to Find Out

You've read the guides. You've tweaked your content, added FAQs, beefed up your author bios, and sprinkled in expert quotes. You hit "update," cross your fingers, and wait for Google's AI Overviews to recognize your brilliance.

But when you check the search results a week later, you're left with a familiar, nagging question: Did any of that actually work?

If you change ten things at once, you can't know which one made the difference. It's like throwing spaghetti at a wall and hoping some of it sticks: messy, inefficient, and even when something does stick, you can't tell why.

Welcome to the new frontier of search. Getting noticed by AI isn't about guesswork; it's about intelligence. And the best way to gather that intelligence is by thinking less like a marketer throwing everything at the wall and more like a scientist running a clean, simple experiment.

The New Game: Why "Getting Cited" Is More Important Than Ranking

For years, the goal was simple: get the #1 spot on Google. But AI Overviews (AIOs) have changed the game. These AI-generated summaries appear at the very top of the search results, answering the user's question directly.

While this is great for users, it creates a new challenge for creators. Your goal is no longer just to rank; it's to have your content selected, synthesized, and cited as a source within that AI-generated answer.

Why? Because that citation is the new top spot. It's a direct link to your site, positioned as a trusted source by Google's own AI.

Many experts suggest that optimizing for AI is just an extension of good SEO, and they're partially right. Clear writing, site authority, and genuine value still matter immensely. But the AI models that build these overviews don't "read" and "rank" content the way traditional algorithms do. They appear to favor specific signals, such as concise definitions, structured data, and clear evidence of expertise, when assembling their answers. This is the core of a new field called Generative Engine Optimization, which focuses on making content easy for AI to understand and use.

The problem is, with so many potential signals, how do you know which ones to focus on for your content and your audience?

From "Spray and Pray" to Proof: Adopting an Experimental Mindset

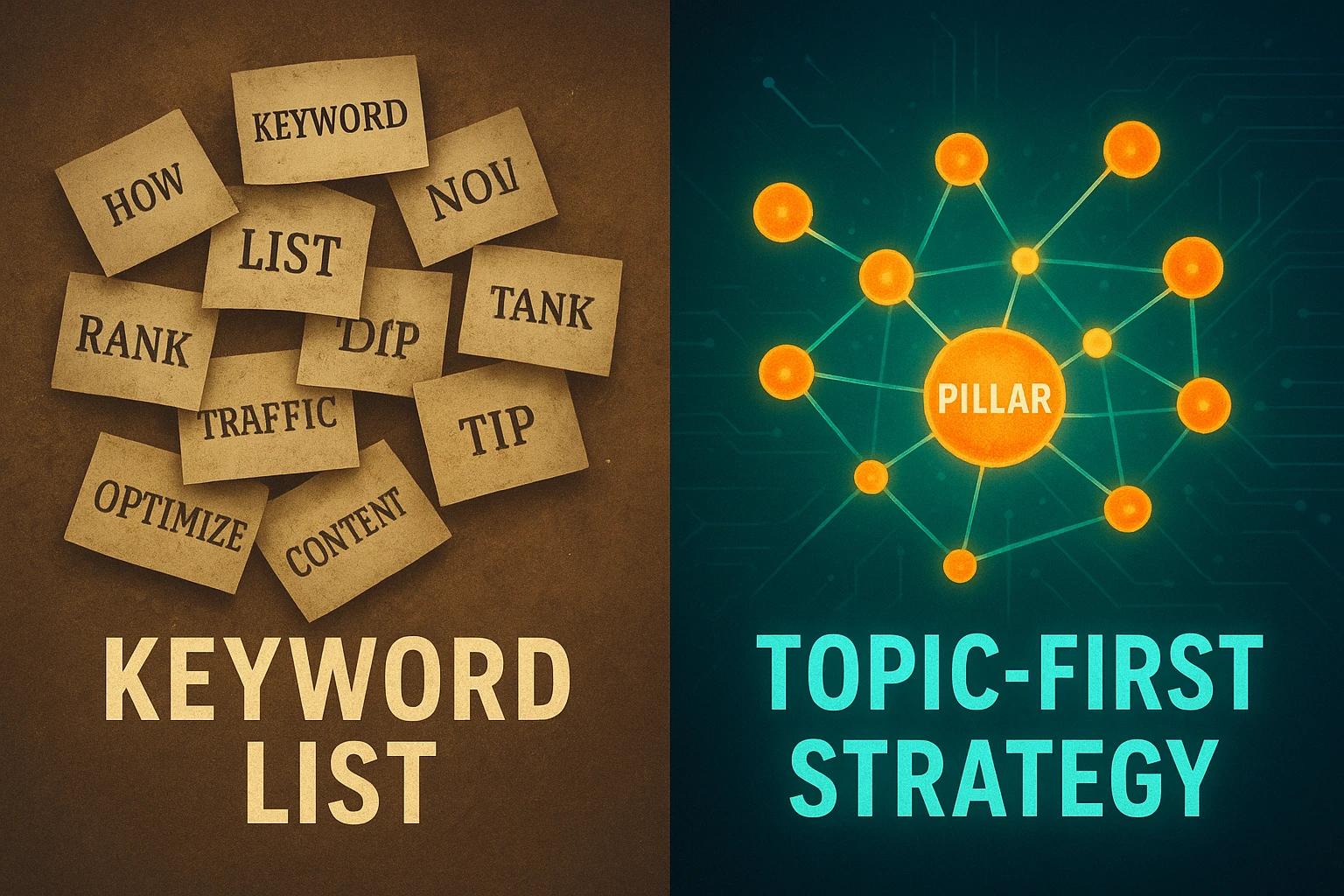

The common approach to AI Overview optimization has become a "spray and pray" checklist: write longer content, add more schema, improve your E-E-A-T signals. While this is all good advice, it doesn't give you proof. You're left without a clear understanding of cause and effect.

Instead, let's borrow a page from the scientific method. By running small, controlled before-and-after experiments (a simplified form of A/B testing), you can test specific changes and see how they affect your chances of being cited. This isn't about overhauling your entire site; it's about making one tiny, measurable change to a single piece of content to see what happens.

This approach gives you something invaluable: proof. Proof that you can use to build a data-driven strategy, get buy-in from your team, and confidently scale what works.

[Image: Diagram illustrating the A/B testing process for AI Overviews, showing an original content piece and a modified version with a clear variable change, leading to a hypothetical AIO citation.]

Your First Small Experiment: A 4-Step Framework

Ready to stop guessing? Here’s a simple framework, inspired by the structured processes used in data science research, to run your first low-risk experiment.

Step 1: Form a Clear Hypothesis

A hypothesis is just an educated guess you want to test. It should be simple and follow an "If I do X, then Y will happen" format.

Start by picking one article that targets a query known to generate AI Overviews. Then, form your hypothesis.

Examples of a good hypothesis:

- "If I add a concise, two-sentence summary in bold at the top of my article on 'what is a variable rate mortgage,' then it will be more likely to be cited in the AI Overview for that query."

- "If I reformat my list of 'best dog training tips' from a paragraph into a numbered list, then the AI Overview is more likely to pull from that list."

- "If I add a small FAQ section with 3-4 direct questions and answers at the end of my blog post, then one of those answers will be cited by the AI."

The key is to be specific. Don't just say "improve the content." State exactly what you'll change.
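
One way to keep hypotheses specific is to write each one into a fixed template. The sketch below is a hypothetical Python helper, not part of any tool mentioned in this article; the `Hypothesis` class, its field names, and the example URL are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One testable guess in "If I do X, then Y will happen" form."""

    page_url: str  # the single article under test (placeholder URL below)
    query: str     # a search query known to trigger an AI Overview
    change: str    # X: the one specific change you will make
    expected: str  # Y: the outcome you expect to observe

    def statement(self) -> str:
        return f"If I {self.change}, then {self.expected}."


h = Hypothesis(
    page_url="https://example.com/best-dog-training-tips",
    query="best dog training tips",
    change="reformat my list of tips from a paragraph into a numbered list",
    expected="the AI Overview is more likely to pull from that list",
)
print(h.statement())
```

If you can't fill in the `change` field without writing something like "improve the content," the hypothesis probably isn't specific enough yet.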

Step 2: Isolate a Single Variable

This is the most crucial step. Change only one thing. If you change the introduction, add a numbered list, and embed a new video, your results will be meaningless. You won't know which element caused the change (or lack thereof).

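Your original article, exactly as it stood before the change, is your "control." The new version with your single change is your "test." Because only one version can be live at a time, keep a saved copy or screenshot of the control for comparison.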

Simple variables to test first:

- A concise definition: Add a clear, simple definition of the main topic right at the beginning.

- A summary box: Create a "Key Takeaways" or "In a Nutshell" box near the top.

- Rephrasing a key sentence: Tweak the wording of a critical point to be more direct and factual.

- Structural changes: Convert a paragraph into a bulleted or numbered list.

- Bolding: Make a key phrase or answer sentence stand out by bolding it.
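
If you keep a saved copy of the page text before and after editing, a quick diff can confirm that you really changed only one thing. This is a rough sketch using Python's standard `difflib` module; the function name `changed_regions` and the sample text are assumptions for illustration. Note that two edits made on adjacent lines will be merged into a single region, so treat the count as a sanity check rather than a guarantee.

```python
import difflib


def changed_regions(control: str, test: str) -> list[tuple[str, list[str], list[str]]]:
    """Return every contiguous block of lines that differs between two versions."""
    a, b = control.splitlines(), test.splitlines()
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    return [
        (tag, a[i1:i2], b[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


# Placeholder article text, for illustration only.
control = "\n".join([
    "What Is a Variable Rate Mortgage?",
    "A long introductory paragraph.",
    "The rest of the article.",
])
test = "\n".join([
    "What Is a Variable Rate Mortgage?",
    "**A two-sentence summary goes here.**",
    "A long introductory paragraph.",
    "The rest of the article.",
])

regions = changed_regions(control, test)
if len(regions) == 1:
    print("OK: one changed region:", regions[0][0])
else:
    print(f"Warning: {len(regions)} changed regions; split them into separate experiments.")
```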

Step 3: Track and Observe Over a Short Timeline

You don't need fancy software to start. A simple spreadsheet will do.

- Log Your Change: Note the URL, the date, your hypothesis, and the exact change you made, and take a screenshot of the "before."

- Set a Timeline: Give it 2-4 weeks. Search engines need time to recrawl your page, and the AI Overview needs time to reflect the change.

- Check Regularly: Once or twice a week, perform the target search query (ideally in an incognito window, which reduces personalization, though results can still vary by location and device). Document what you see. Is there an AI Overview? Does it cite your page? Take a screenshot.
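
A spreadsheet is enough, but if you prefer a script, the tracking steps above can be sketched in Python with only the standard library. Everything here is a hypothetical layout: the `Observation` columns, the `check_dates` and `append_observation` helpers, and the file format are assumptions, and the checks themselves are still done by hand in the browser.

```python
import csv
from dataclasses import asdict, dataclass, fields
from datetime import date, timedelta
from pathlib import Path


@dataclass
class Observation:
    checked_on: str       # ISO date of the manual check
    url: str              # page under test
    query: str            # target search query
    overview_shown: bool  # did an AI Overview appear?
    page_cited: bool      # did it cite your page?
    screenshot: str = ""  # file name of the screenshot you took
    notes: str = ""


def check_dates(start: date, weeks: int = 4, checks_per_week: int = 2) -> list[date]:
    """Spread manual checks evenly over the test window after the change date."""
    total = weeks * checks_per_week
    return [start + timedelta(days=(k * 7) // checks_per_week) for k in range(1, total + 1)]


def append_observation(log_path: Path, obs: Observation) -> None:
    """Append one row to the CSV log, writing the header if the file is new."""
    is_new = not log_path.exists()
    with log_path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=[fld.name for fld in fields(Observation)])
        if is_new:
            writer.writeheader()
        writer.writerow(asdict(obs))
```

For example, `check_dates(date(2025, 1, 6), weeks=2)` schedules checks on 9, 13, 16, and 20 January 2025. After each check, call `append_observation` with what you saw, and the resulting CSV opens directly in any spreadsheet tool.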

Step 4: Analyze and Iterate

After a few weeks, look at your log.

- Did you get cited? Amazing! That's evidence in favor of your hypothesis. Now you can try the same change on other relevant articles to see whether the result holds.

- Did nothing happen? That's not a failure; it's data. Your hypothesis wasn't supported, which is just as valuable. You now know that this specific change, on its own, wasn't a strong enough signal for this page and query. You can revert the change and test a new hypothesis.

- Did a competitor get cited? Excellent! Analyze their content. What format are they using? Can you form a new hypothesis based on their success?

The goal of each small experiment is to learn one new thing. Over time, these small learnings compound into a powerful, proven strategy for getting your content noticed.
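
To make the review above less subjective, you can tally your log before deciding what it means. The following is a minimal sketch, assuming each log entry is a dictionary with `overview_shown`, `page_cited`, and a hand-recorded `cited_domains` list; those field names, the `summarize` function, and the sample entries are all made up for illustration. With only a handful of checks, treat the numbers as a hint about what to test next, not as statistical proof.

```python
from collections import Counter


def summarize(observations: list[dict], own_domain: str) -> dict:
    """Tally how often an AI Overview appeared, cited you, or cited others."""
    shown = [o for o in observations if o["overview_shown"]]
    cited = [o for o in shown if o["page_cited"]]
    competitors = Counter(
        domain
        for o in shown
        for domain in o.get("cited_domains", [])
        if domain != own_domain
    )
    return {
        "checks": len(observations),
        "overviews_shown": len(shown),
        "times_cited": len(cited),
        # Share of overviews that cited the page; None if no overview ever appeared.
        "citation_rate": len(cited) / len(shown) if shown else None,
        "top_competitors": competitors.most_common(3),
    }


# Made-up log entries, for illustration only.
log = [
    {"overview_shown": True, "page_cited": False, "cited_domains": ["competitor-a.example"]},
    {"overview_shown": True, "page_cited": True,
     "cited_domains": ["competitor-a.example", "mysite.example"]},
    {"overview_shown": False, "page_cited": False, "cited_domains": []},
    {"overview_shown": True, "page_cited": True, "cited_domains": ["mysite.example"]},
]

result = summarize(log, own_domain="mysite.example")
print(result)
```

The `top_competitors` list points you to the pages worth studying when a competitor is cited instead of you.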

From Experiment to Strategy

Once you've run a few small experiments and have proof of what works, you can start scaling. The insights you gain from testing a single variable on one page can inform your entire content plan. You'll move from making random tweaks to implementing a repeatable, data-backed process.

This is the foundation for a truly effective content machine. When you know which specific formats and signals work for your niche, you can begin exploring ways to apply them consistently across your site. For many, this is where they start looking into automating their content strategy with AI-powered tools to implement these proven tactics at scale.

Frequently Asked Questions

What are AI Overviews again?

AI Overviews are AI-generated answers that appear at the top of some Google search results. They synthesize information from multiple web pages to provide a direct, comprehensive answer to a user's query, with citations linking back to the original sources.

How is optimizing for AI Overviews different from traditional SEO?

Traditional SEO focuses heavily on ranking factors like keywords, backlinks, and technical site health to get your entire page to the top. Optimizing for AIOs is about making specific snippets of your content, such as a definition, a list, or a key stat, so clear and authoritative that the AI chooses to include them in its generated summary.

How long should I run an experiment?

For a single content change, 2-4 weeks is a reasonable timeframe to see if it gets picked up. Search engines need time to recrawl your page and for the AI models to potentially incorporate the new information.

What if my experiment shows no change at all?

That is still a successful experiment! You've learned that the variable you tested wasn't a strong enough signal to influence the AI. This valuable insight saves you from wasting time applying that change across dozens of other pages. Simply document the result, revert the change if you wish, and move on to your next hypothesis.

Where can I get ideas for what variables to test?

Start by looking at the AI Overviews that already exist for your target topics. Who is being cited? How is their information formatted? Do they use lists, tables, or bolded definitions? Use the current winners as inspiration for your next hypothesis.

Roald

Founder Fonzy — Obsessed with scaling organic traffic. Writing about the intersection of SEO, AI, and product growth.
